Modern software applications are expected to be fast, scalable, and always available. To meet these demands, many organizations have shifted from building large, monolithic systems to adopting microservices architecture. When combined with Kubernetes, a powerful container orchestration platform, microservices become easier to manage and scale. Understanding how these two technologies work together helps businesses build resilient applications that can grow with user demand.

TL;DR: Microservices architecture breaks applications into small, independent services that work together. Kubernetes is a platform that automates the deployment, scaling, and management of these services using containers. Together, they create flexible, scalable, and reliable systems. This combination allows teams to deploy faster, recover from failures quickly, and scale specific parts of an application as needed.

What Is Microservices Architecture?

In traditional monolithic architecture, an application is built as a single, unified unit. All components—such as the user interface, business logic, and database access—are tightly connected. While this approach can work for small applications, it becomes difficult to maintain and scale as the system grows.

Microservices architecture takes a different approach: it breaks the application into small, independent services, each focused on one specific business capability. These services communicate through lightweight APIs, typically HTTP-based (such as REST) or asynchronous message queues.

For example, an e-commerce application might include:

- A User Service for managing accounts

- A Product Service for catalog management

- An Order Service for handling purchases

- A Payment Service for transactions

Each service can be developed, deployed, and scaled independently. If the payment system needs extra resources during peak traffic, only that service needs to scale—not the entire application.
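To make "lightweight APIs over HTTP" concrete, here is a minimal sketch of a Product Service like the one in the list above, using only Python's standard library. The `/products/<id>` endpoint, the in-memory catalog, and the `fetch_product` client are hypothetical; a real service would typically use a web framework and its own database.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical in-memory catalog standing in for the Product Service's own database.
CATALOG = {"42": {"id": "42", "name": "Coffee mug", "price_cents": 1299}}


class ProductHandler(BaseHTTPRequestHandler):
    """Serves GET /products/<id> as JSON (hypothetical endpoint)."""

    def do_GET(self):
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "products" and parts[1] in CATALOG:
            body = json.dumps(CATALOG[parts[1]]).encode()
            self.send_response(200)
        else:
            body = json.dumps({"error": "not found"}).encode()
            self.send_response(404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for the example


def start_product_service(port=0):
    """Run the service in a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), ProductHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_product(base_url, product_id):
    """How another service (e.g. an Order Service) might call this API."""
    with urllib.request.urlopen(f"{base_url}/products/{product_id}") as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    server = start_product_service()
    url = f"http://127.0.0.1:{server.server_address[1]}"
    print(fetch_product(url, "42"))
    server.shutdown()
    server.server_close()
```

The caller only depends on the HTTP contract, not on how the Product Service is implemented, which is what lets each service be rewritten, redeployed, or scaled on its own.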

Key Benefits of Microservices

Organizations adopt microservices architecture for several important reasons:

- Independent Deployment: Teams can update one service without redeploying the entire application.

- Scalability: Individual services scale based on demand.

- Fault Isolation: If one service fails, others can continue operating, provided the services that call it handle the failure gracefully.

- Technology Flexibility: Different services can use different programming languages or databases.
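Fault isolation depends on callers tolerating a failed dependency. A minimal sketch, assuming a hypothetical Order Service that enriches an order with product details and still answers when the Product Service is unreachable. The function names and the "product is None" fallback are illustrative choices, not a standard API.

```python
from typing import Callable, Dict


def order_summary(order: Dict, fetch_product: Callable[[str], Dict]) -> Dict:
    """Build an order summary where product details are optional extras.

    If the (hypothetical) Product Service call fails, the Order Service still
    returns the data it owns instead of failing the whole request.
    """
    summary = {"order_id": order["id"], "status": order["status"]}
    try:
        summary["product"] = fetch_product(order["product_id"])
    except (ConnectionError, TimeoutError):
        summary["product"] = None  # degrade gracefully: details unavailable
    return summary


def healthy_product_service(product_id: str) -> Dict:
    """Stand-in for a working Product Service call."""
    return {"id": product_id, "name": "Coffee mug"}


def failing_product_service(product_id: str) -> Dict:
    """Stand-in for a Product Service that is down."""
    raise ConnectionError("product service unreachable")


if __name__ == "__main__":
    order = {"id": "o-1", "status": "paid", "product_id": "42"}
    print(order_summary(order, healthy_product_service))
    print(order_summary(order, failing_product_service))
```

Passing the dependency in as a function keeps the example testable; in a real system the same idea is usually combined with request timeouts so a slow service cannot tie up its callers.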

However, microservices also introduce complexity. Managing many small services requires strong orchestration, monitoring, and networking capabilities. This is where Kubernetes plays a crucial role.

Understanding Containers

Before diving into Kubernetes, it is important to understand containers. Containers package an application and all its dependencies into a lightweight, portable unit. Unlike traditional virtual machines, which each run a full guest operating system, containers share the host operating system's kernel, so they start faster and use fewer resources.

Docker is one of the most widely used tools for creating and running containers. In a microservices setup, each service typically runs inside its own container. This ensures consistency across development, testing, and production environments.

Containers largely solve the “it works on my machine” problem: because the same container image runs in development, testing, and production, the software sees the same environment everywhere.

What Is Kubernetes?

Kubernetes is an open-source platform designed to automate the deployment, scaling, and management of containerized applications. Originally developed by Google, it is now hosted by the Cloud Native Computing Foundation (CNCF).

In simple terms, Kubernetes acts like a smart manager for containers. It ensures that the right number of containers are running, replaces failed ones, distributes traffic, and scales services up or down based on demand.

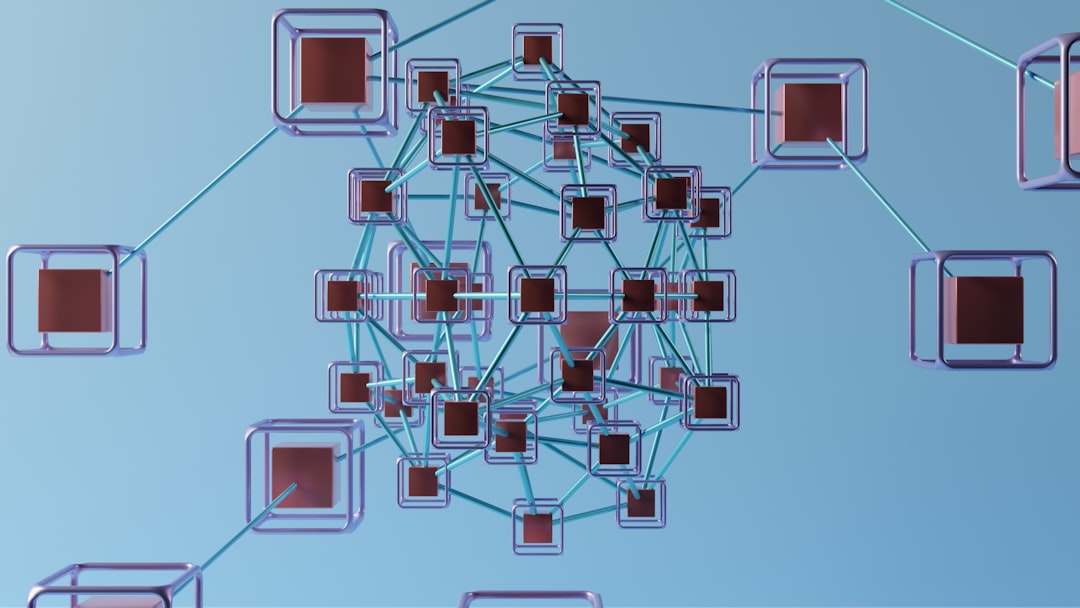

Core Kubernetes Concepts Explained Simply

To understand how Kubernetes supports microservices, it helps to break down a few basic concepts:

- Cluster: A group of machines (nodes) that run containerized applications.

- Node: A single machine (virtual or physical) inside the cluster.

- Pod: The smallest deployable unit in Kubernetes. A pod wraps one or more containers that share networking and storage; most pods run a single container.

- Deployment: Declares the desired state for a set of identical pods, such as how many replicas should run and how updates are rolled out.

- Service: Provides a stable network endpoint for accessing a group of pods.
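A Service finds its pods through a label selector rather than fixed addresses. The sketch below models that relationship in plain Python; the `Pod` and `Service` classes are simplified stand-ins for the real Kubernetes objects, and the `app: order-service` label and pod IPs are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Pod:
    name: str
    ip: str
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class Service:
    name: str
    selector: Dict[str, str]

    def matches(self, pod: Pod) -> bool:
        # A pod is selected when it carries every key/value pair in the selector.
        return all(pod.labels.get(k) == v for k, v in self.selector.items())

    def endpoints(self, pods: List[Pod]) -> List[str]:
        """IPs of the pods this Service would route to."""
        return [p.ip for p in pods if self.matches(p)]


if __name__ == "__main__":
    pods = [
        Pod("order-1", "10.0.0.11", {"app": "order-service"}),
        Pod("order-2", "10.0.0.12", {"app": "order-service"}),
        Pod("user-1", "10.0.0.21", {"app": "user-service"}),
    ]
    svc = Service("order-service", {"app": "order-service"})
    print(svc.endpoints(pods))  # → ['10.0.0.11', '10.0.0.12']
```

Because membership is decided by labels, new or replacement pods join the Service automatically. In a real cluster, pods that are not yet ready are also kept out of the set that receives traffic.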

For example, if an Order Service needs three instances to handle traffic, Kubernetes ensures three pods are running. If one crashes, Kubernetes automatically creates a replacement.
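This "keep three running" behavior comes from a reconciliation loop: a controller repeatedly compares the desired state with the observed state and acts on the difference. A simplified sketch; the `reconcile` function and pod naming scheme are hypothetical, and real controllers work against the Kubernetes API rather than an in-memory list.

```python
import itertools
from typing import List

_ids = itertools.count(1)


def new_pod(app: str) -> str:
    """Create a uniquely named (hypothetical) pod for the given app."""
    return f"{app}-{next(_ids)}"


def reconcile(desired: int, running: List[str], app: str) -> List[str]:
    """Return a pod list whose length matches the desired replica count."""
    pods = list(running)
    while len(pods) < desired:  # too few: start replacements
        pods.append(new_pod(app))
    while len(pods) > desired:  # too many: remove extras
        pods.pop()
    return pods


if __name__ == "__main__":
    pods = reconcile(3, [], "order-service")
    print(pods)      # three new pods
    pods = pods[1:]  # simulate one pod crashing
    pods = reconcile(3, pods, "order-service")
    print(pods)      # the crashed pod has been replaced; still three
```

The loop never needs to know why a pod disappeared; it only sees that two are running where three are desired, which is what makes the approach robust.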

How Microservices and Kubernetes Work Together

Microservices define how an application is structured. Kubernetes defines how it is managed and run. Together, they form a powerful combination.

Here is how the process typically works:

- Developers write each microservice independently.

- Each service is packaged into a container.

- Containers are deployed into a Kubernetes cluster.

- Kubernetes manages scaling, networking, and recovery.

This setup allows teams to:

- Deploy updates without downtime.

- Automatically scale services during traffic spikes.

- Maintain high availability through self-healing mechanisms.
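Updates without downtime are usually done as a rolling update: new pods start before old ones are removed, so serving capacity never drops below the desired count. A simplified simulation, roughly comparable to a Deployment with `maxSurge: 1` and `maxUnavailable: 0`; it assumes every new pod becomes ready immediately, and the version strings are hypothetical.

```python
from typing import List, Tuple


def rolling_update(pods: List[str], new_version: str) -> Tuple[List[str], List[int]]:
    """Replace every pod with new_version, one at a time.

    Mimics maxSurge=1, maxUnavailable=0: start one new pod, then stop one old
    pod. Returns the final pods and the running-pod count after each step.
    """
    current = list(pods)
    history = []
    while any(v != new_version for v in current):
        current.append(new_version)  # surge: start one new pod (assumed ready)
        history.append(len(current))
        old_index = next(i for i, v in enumerate(current) if v != new_version)
        current.pop(old_index)  # then retire one old pod
        history.append(len(current))
    return current, history


if __name__ == "__main__":
    final, history = rolling_update(["v1", "v1", "v1"], "v2")
    print(final)    # → ['v2', 'v2', 'v2']
    print(history)  # → [4, 3, 4, 3, 4, 3]
```

The running count oscillates between the desired count and one extra pod, so there is always full capacity during the rollout. Real Deployments also wait for readiness checks before retiring old pods.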

For instance, if a social media application experiences a sudden surge in user uploads, Kubernetes can automatically increase the number of media processing service instances. When traffic decreases, it scales them down, saving resources.

Scaling Made Simple

One of the biggest advantages of Kubernetes in a microservices environment is auto-scaling. The Horizontal Pod Autoscaler can monitor CPU usage or custom metrics and adjust the number of running pods accordingly.

There are two main types of scaling:

- Horizontal Scaling: Adding or removing pod replicas.

- Vertical Scaling: Increasing or decreasing the CPU and memory requests and limits of each pod (for example, with the Vertical Pod Autoscaler add-on).

Horizontal scaling is especially powerful in microservices because each service can scale independently based on its workload.
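The Horizontal Pod Autoscaler's core calculation is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change made when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies the formula and clamps the result to a minimum and maximum; it omits details the real controller handles, such as stabilization windows and missing metrics, and the default bounds of 1 and 10 replicas are assumptions for illustration.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """HPA-style replica calculation (simplified sketch)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to the target: skip scaling
    proposed = math.ceil(current * ratio)
    # Respect the configured bounds (hypothetical defaults above).
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # A media-processing service at 90% CPU against a 50% target.
    print(desired_replicas(3, 90, 50))   # → 6
    # Traffic drops: 6 pods now averaging 20% CPU.
    print(desired_replicas(6, 20, 50))   # → 3
```

This mirrors the upload-surge example earlier in the article: the same formula scales out during the spike and back in when utilization falls, and the tolerance keeps small fluctuations from causing constant resizing.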

Load Balancing and Service Discovery

In a distributed microservices system, services must communicate reliably. Kubernetes provides built-in load balancing and service discovery.

When multiple pods run for the same service, Kubernetes automatically spreads traffic across them. It also gives each Service a stable DNS name, so services can find and communicate with each other without hard-coded IP addresses.
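A simplified client-side view of what a Service provides: one stable name that maps to a changing set of pod endpoints, with requests spread across them. This sketch uses round-robin selection for clarity; in a real cluster the routing is done by kube-proxy (or an alternative data plane), and in its default iptables mode backends are picked at random rather than strictly in turn. The name follows the `<service>.<namespace>.svc.cluster.local` pattern for the default cluster domain; the `ServiceRegistry` class and addresses are hypothetical.

```python
import itertools
from typing import Dict, Iterator, List


class ServiceRegistry:
    """Maps stable service names to current pod endpoints (hypothetical)."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, List[str]] = {}
        self._cursors: Dict[str, Iterator[str]] = {}

    def update(self, name: str, endpoints: List[str]) -> None:
        # Pods come and go; callers keep using the same name.
        self._endpoints[name] = list(endpoints)
        self._cursors[name] = itertools.cycle(self._endpoints[name])

    def pick(self, name: str) -> str:
        """Return the next endpoint for a service, round-robin."""
        if not self._endpoints.get(name):
            raise LookupError(f"no endpoints for {name}")
        return next(self._cursors[name])


if __name__ == "__main__":
    registry = ServiceRegistry()
    name = "order-service.shop.svc.cluster.local"
    registry.update(name, ["10.0.0.11", "10.0.0.12", "10.0.0.13"])
    print([registry.pick(name) for _ in range(4)])
    # → ['10.0.0.11', '10.0.0.12', '10.0.0.13', '10.0.0.11']
```

When a pod is replaced, only the endpoint list changes; callers keep addressing the same name.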

Resilience and Self-Healing

Failures are inevitable in distributed systems. Servers crash, networks fail, and containers unexpectedly stop. Kubernetes is designed with self-healing capabilities.

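If a container crashes or fails its health checks, Kubernetes:

- Restarts the container (for example, after repeated liveness-probe failures)

- Creates a replacement pod when the pod is managed by a controller such as a Deployment, placing it on another node if the original node has failed

- Stops routing traffic to pods that fail their readiness checks, so requests go only to healthy instances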
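Restarts are driven by health checks: the kubelet runs a liveness probe periodically and restarts the container once the probe fails `failureThreshold` consecutive times (3 by default). The sketch below simulates only that counting logic; the probe results are hypothetical, and timing settings such as `periodSeconds` and restart back-off are ignored.

```python
from typing import Iterable, List


def simulate_liveness(results: Iterable[bool], failure_threshold: int = 3) -> List[int]:
    """Return the probe indices at which the container would be restarted.

    results: probe outcomes in order (True = healthy).
    A restart happens after `failure_threshold` consecutive failures; the
    failure count then starts over, as it would for a freshly started container.
    """
    restarts = []
    consecutive_failures = 0
    for i, healthy in enumerate(results):
        if healthy:
            consecutive_failures = 0  # any success resets the count
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            restarts.append(i)
            consecutive_failures = 0
    return restarts


if __name__ == "__main__":
    probes = [True, False, False, True, False, False, False, True]
    print(simulate_liveness(probes))  # → [6]
```

Requiring several consecutive failures avoids restarting a container over a single slow response, at the cost of reacting a little later to a real hang.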

This automatic recovery significantly improves system reliability and reduces manual intervention.

Challenges to Consider

Although microservices with Kubernetes offer many benefits, they also introduce complexity. Teams must manage:

- Service communication and networking

- Observability (logging and monitoring)

- Security configurations

- Container image management

Additionally, debugging distributed systems can be more challenging than troubleshooting a monolithic application. Organizations often adopt tools like Prometheus for monitoring and Grafana for visualization to maintain visibility into system performance.
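Prometheus typically collects metrics by scraping an HTTP endpoint (conventionally `/metrics`) that returns plain text in its exposition format. In practice you would use an official Prometheus client library; this standard-library-only sketch just shows what that text looks like for a request counter. The metric name `http_requests_total`, its labels, and the helper functions are hypothetical, and label-value escaping is not handled.

```python
from collections import Counter

# Hypothetical counter keyed by (method, status) label values.
REQUESTS: Counter = Counter()


def record_request(method: str, status: int) -> None:
    """Count one handled request."""
    REQUESTS[(method, str(status))] += 1


def render_metrics() -> str:
    """Render REQUESTS in the Prometheus text exposition format."""
    lines = [
        "# HELP http_requests_total Total HTTP requests handled.",
        "# TYPE http_requests_total counter",
    ]
    for (method, status), count in sorted(REQUESTS.items()):
        lines.append(
            f'http_requests_total{{method="{method}",status="{status}"}} {count}'
        )
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    for _ in range(3):
        record_request("GET", 200)
    record_request("POST", 500)
    print(render_metrics(), end="")
```

Once each service exposes counters like this, Prometheus can aggregate them across all pods and Grafana can chart, for example, the error rate of a single service.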

When Should Organizations Use Microservices with Kubernetes?

This architecture is especially beneficial when:

- The application is large and complex

- Multiple teams work on different features

- High scalability is required

- Continuous deployment is essential

For small projects, a monolithic approach may still be sufficient. However, as systems grow, the flexibility of microservices combined with Kubernetes often becomes invaluable.

Conclusion

Microservices architecture and Kubernetes form a powerful partnership in modern software development. Microservices allow applications to be broken down into manageable, independent components. Kubernetes provides the automation needed to deploy, scale, and maintain those components effectively.

By combining these technologies, organizations gain flexibility, resilience, and scalability. Although the learning curve can be steep, the long-term benefits often outweigh the challenges. As cloud-native development continues to evolve, microservices and Kubernetes remain at the forefront of building reliable, future-ready applications.

Frequently Asked Questions (FAQ)

1. What is the main difference between monolithic and microservices architecture?

A monolithic architecture combines all components into a single unit, while microservices split the application into smaller, independent services that communicate through APIs.

2. Is Kubernetes required for microservices?

No, but Kubernetes greatly simplifies the deployment and management of microservices, especially at scale.

3. Can microservices run without containers?

Yes, but containers make deployment more consistent and efficient. Most modern microservices implementations use containers.

4. What problems does Kubernetes solve?

Kubernetes handles container orchestration tasks such as scaling, load balancing, self-healing, and automated rollouts.

5. Are microservices always better than monolithic architecture?

Not always. Microservices are ideal for complex, scalable systems, but smaller applications may function well as monoliths.

6. What skills are needed to manage Kubernetes?

Teams typically need knowledge of containers, networking, cloud infrastructure, and monitoring tools to effectively manage Kubernetes environments.